Considerations for Migration and Merge of Orgs

Introduction

Migrating or merging environments in Salesforce might seem like something that does not require much time. It’s also a great opportunity to do some cleanup, lighten up some legacy processes, and update your instance to meet your current needs. You may think that since a piece of functionality already works well in one environment, you should be able to just push it to another one and that’s pretty much it. But there are a lot of cumbersome technicalities to take into account, and they can make this move more complex than creating a feature from scratch. In this post we’ll try to shed some light on the most important points to consider when migrating large sets of features to another instance. All of these notes apply not just to complete org migrations, but also to any deployment of a feature from one environment to another, a merge of different orgs into one, or a split of one org into several orgs.

Difference Between Data and Metadata

When we talk about migrating or merging environments, you need to have a clear understanding of what you are moving. Data is the information you store about your business. Metadata is the structure of that data. In Salesforce this includes the definition of the objects, fields, page layouts, list views, processes, components, etc. that provide the functionality in your org to interact with your data. Here we’ll focus on the migration of metadata, which is usually more complex than the migration of data. You also cannot migrate data without migrating the metadata first. So let’s get to it.

How to Move Metadata Between Production Environments

❌ Change Sets: they are only valid deployment methods between a production environment and its connected sandboxes and between connected sandboxes created from the same production environment. Production instances are not connected to one another in a way that would allow metadata to be pushed via change sets.

✅ Packages: they are the declarative way to create bundles of metadata that you can create in your source production environment and install in your destination production environment. They are the “change sets” for production to production deployments. The only issue is that some metadata items may not be packageable.

✅ Metadata API (the deployment API): this is the most complete way to group and deploy metadata between production environments. It may require installing software on your computer and someone with stronger technical skills, since you may need to create XML files or know where the metadata resides in a folder structure. Examples of tools that use the Metadata API are Workbench, the Salesforce Extensions for Visual Studio Code, and the Ant Migration Tool.
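To give an idea of the XML files mentioned above, here is a minimal Python sketch that generates a `package.xml` manifest, the file that lists which metadata items to retrieve or deploy. The member names (`Model__c`, `Opportunity.Needs_Follow_up__c`) and the API version are placeholders chosen for illustration, not values from a real org.

```python
import xml.etree.ElementTree as ET

# Namespace used by Metadata API manifests.
METADATA_NS = "http://soap.sforce.com/2006/04/metadata"


def build_package_xml(members_by_type, api_version="58.0"):
    """Return a package.xml manifest as a string.

    members_by_type maps a metadata type name (e.g. "CustomObject")
    to the list of API names to include. api_version is an assumption;
    use the version your org supports.
    """
    root = ET.Element("Package", xmlns=METADATA_NS)
    for type_name in sorted(members_by_type):
        types_el = ET.SubElement(root, "types")
        for member in sorted(members_by_type[type_name]):
            ET.SubElement(types_el, "members").text = member
        ET.SubElement(types_el, "name").text = type_name
    ET.SubElement(root, "version").text = api_version
    return ET.tostring(root, encoding="unicode")


# Hypothetical items to migrate.
manifest = build_package_xml({
    "CustomObject": ["Model__c"],
    "CustomField": ["Opportunity.Needs_Follow_up__c"],
})
print(manifest)
```

Generating the manifest from your inventory, rather than typing it by hand, keeps the list of what you deploy in sync with the spreadsheet you build in the next step.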

Step 1: Make an Inventory of Each Environment

In order to know what to package you need to know what you have. This requires an analysis of both your environments (source and destination) to keep track of what you have in each. This is an extensive step since you have to go through all of your metadata items one by one and list them for each environment: every object, every field, every record type, every list view, every report, every dashboard, every field set, every workflow rule, every email alert, every field update, every process builder, every flow, you get the idea…

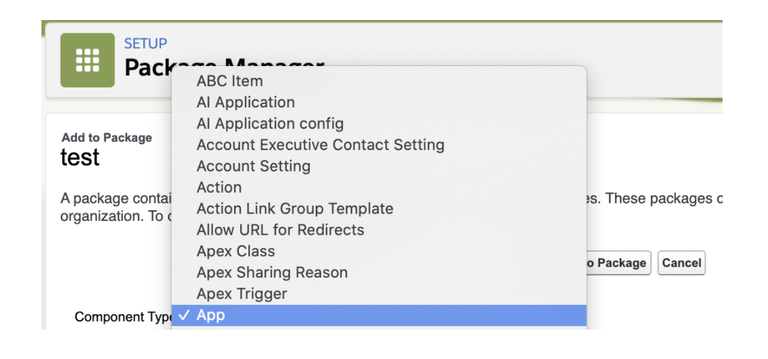

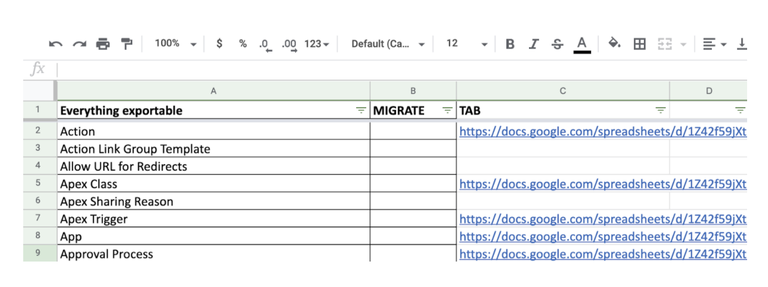

You can use tools to compare all the metadata by downloading it from both environments and doing a diff (a file-by-file comparison), but the results do not always make it straightforward to tell whether something is really the same in both environments (as we’ll see in later steps). I wish there was an easy way to do this, but there’s not one I can find other than taking the time to put together a spreadsheet with all your exportables. Spreadsheets are your best friend in this case, since you will have to record your findings at a very granular level. We recommend you create a basic spreadsheet with tabs for each exportable. To get the list of what can be packaged, go to Setup, search “Package Manager”, create a Package with any name and then click Add:

The “Component Type” drop-down will show you everything that is exportable in an org. Use the values in that drop-down as your master list of metadata you need to review:

Then create a tab for each one of the exportables (objects, custom fields, etc.):

And repeat for each environment. If your destination environment is a brand new, clean org, your job is cut to about a third (because there’s nothing to worry about overwriting in the destination). Otherwise, you’re in for quite a ride.

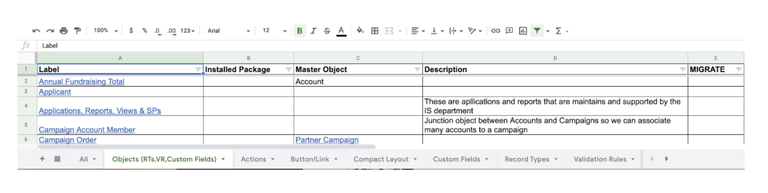

To easily get a list of whatever metadata you want, go to the Classic View in Salesforce, since it’s easier to select and copy into a spreadsheet. For example: to get a list of Custom Objects:

- Go to Setup > Custom Objects

- Select all objects and copy

- Go to Excel and paste the list

- Unmerge the cells (sometimes the paste merges some cells)

- Filter by the Installed Package column to display the ones that do not belong to an installed AppExchange package.

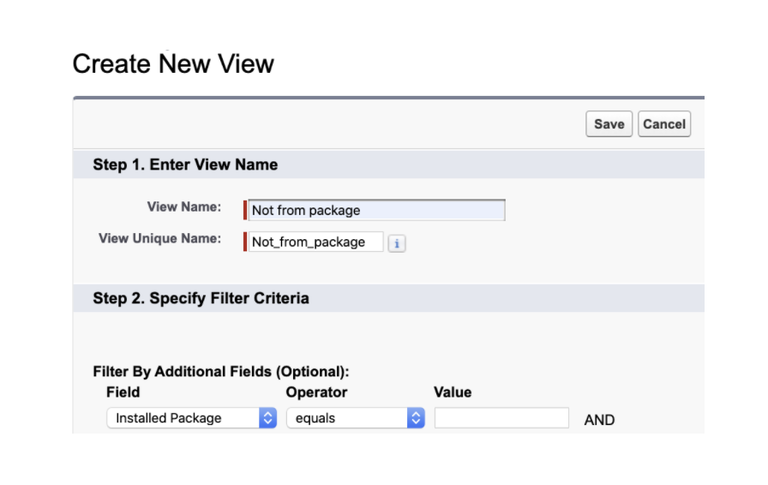

For anything other than objects, fields and validation rules you can create list views to only review the metadata that does not belong to installed packages. This way you save yourself the step of filtering out what comes from those packages, since you can’t package something that comes from an installed package anyway.
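If you would rather filter outside of Excel, the same “not from an installed package” filter can be sketched in a few lines of Python. This assumes you saved the pasted list as CSV with a column named “Installed Package”; the column names and the sample rows below are made up for illustration.

```python
import csv
import io


def unpackaged_rows(csv_text, package_column="Installed Package"):
    """Keep only rows whose installed-package column is empty."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if not (row.get(package_column) or "").strip()]


# Hypothetical export of the Custom Objects list.
sample = """Label,API Name,Installed Package
Model,Model__c,
Invoice,vendor__Invoice__c,Vendor App
Site Visit,Site_Visit__c,
"""

custom_only = unpackaged_rows(sample)
print([row["API Name"] for row in custom_only])
```

The rows that survive the filter are the ones you own and therefore the ones that belong in your inventory.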

Custom or Standard?

The only items you do not have to worry about analyzing are:

- Standard objects, fields or anything that already exists in Salesforce by default and cannot be customized, for example: the Opportunity object (the object itself, not its custom fields), the Close Date and all standard fields, etc. These are not packageable in the first place because there’s nothing you can change in their definition.

- Anything in a package from the AppExchange. Packages will have to be reinstalled in your destination org if they aren’t already there and you want to keep their functionality.

Note that if you, for example, created a validation rule on a standard field, the standard field will already be in your destination org as it’s part of Salesforce and does not have to be packaged and migrated, but the validation rule itself will have to be packaged and migrated if you want to keep it in your destination org as that’s a customization. The same principle applies to values added to standard picklist fields, the field will be in the destination org, but not the custom values that did not come with the original org. Unfortunately individual values of a picklist cannot be added to a change set or package, so you’ll have to do it manually. This goes as well for the assignment of picklist values to specific record types.

Step 2: Compare Your Inventories

Now that you have the current status of each one of your orgs, you need to compare them to figure out whether each metadata item from the source org already exists in the destination org or not.
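As a rough sketch of that comparison, assuming each inventory tab has been reduced to a mapping of API Name to Label (the field names below are invented), a few set operations split the items into three buckets:

```python
def compare_inventories(source, destination):
    """Bucket metadata items by where their API Name exists.

    source and destination map API Name -> Label for one metadata type.
    """
    source_names, destination_names = set(source), set(destination)
    return {
        "only_in_source": sorted(source_names - destination_names),
        "only_in_destination": sorted(destination_names - source_names),
        # Same API Name in both orgs: still needs a manual review,
        # because the name alone does not prove the items are the same.
        "in_both": sorted(source_names & destination_names),
    }


# Hypothetical Opportunity fields in each org.
source_fields = {"Needs_Follow_up__c": "Needs Follow-up", "Region__c": "Region"}
destination_fields = {"Needs_Follow_up__c": "Needs Follow-up", "Tier__c": "Tier"}

report = compare_inventories(source_fields, destination_fields)
print(report)
```

The “in_both” bucket is where most of the analysis time goes, for the reasons explained in the next section.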

What Makes a Metadata Item the Same?

There are two things you have to take into account for two metadata items to be considered the same: the API Name and concept behind the metadata item. This is applicable to any metadata item: fields, objects, record types, process builders, flows, etc.

API Name (sometimes referred to as “Developer Name”)

It’s the unique identifier of a metadata item in an org. Labels are just to show to the end user, but it’s the API Name that makes it uniquely identifiable. For example: two fields can have the same label but different API Names in the same org. Likewise, two fields cannot have the same API Name in the same org, but a field can have the same API Name in two different orgs.

For example, if you are pushing a field from a source org to a destination org (from and to the same object) the following would be true:

Case 1: If the API Names are the same, even if the Labels are different, Salesforce considers them the same field, because of the same API Name, and the rest of the information about the field (the Label for example) is overwritten with the new information. The object will have the version of the field that just came in from the source org.

| Source Org Label | Source Org API Name | Destination Org Label | Destination Org API Name | Label After Deployment | API Name After Deployment |
| --- | --- | --- | --- | --- | --- |
| My new field | the_field__c | My old field | the_field__c | My new field | the_field__c |

Case 2: If the API Names are different, even if the Labels are the same, Salesforce considers them different fields because of the different API Names, and the field from the source will be created as a brand new field in the destination org. The object will have both fields: the old one that it already had and the new one that just came in from the source org.

| Source Org Label | Source Org API Name | Destination Org Label | Destination Org API Name | Label After Deployment | API Name After Deployment |
| --- | --- | --- | --- | --- | --- |
| My field | the_new_field__c | My field | the_old_field__c | My field | the_old_field__c |
| | | | | My field | the_new_field__c |
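The two cases can be mimicked with a toy model in Python: treat the fields of an object as a dictionary keyed by API Name, so that an incoming field either overwrites an existing key or adds a new one. This is only an analogy to make the rule concrete, not how Salesforce implements deployments.

```python
def deploy_fields(destination, incoming):
    """Return the destination's fields after a deployment.

    Both arguments map API Name -> Label. A matching API Name is
    overwritten; a new API Name is added alongside existing fields.
    """
    result = dict(destination)
    result.update(incoming)
    return result


# Case 1: same API Name, different Labels -> the Label is overwritten.
case1 = deploy_fields({"the_field__c": "My old field"},
                      {"the_field__c": "My new field"})

# Case 2: different API Names, same Label -> both fields coexist.
case2 = deploy_fields({"the_old_field__c": "My field"},
                      {"the_new_field__c": "My field"})

print(case1)
print(case2)
```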

Concept of a Metadata Item

Two metadata items can have the same API Name (in different orgs), which technically Salesforce will consider the same when deploying. BUT what they represent or how they are used could be different. For example:

- In the source org the field with label “Needs Follow-up” and API Name “Needs_Follow_up__c” in the Opportunity object is checked by a user when the Opportunity will need to be reviewed a week later (there’s a process configured that takes all records that have this field set to true and haven’t been modified in 7 days, and sends an email notification to the Opportunity owner).

- In the destination org the field with label “Needs Follow-up” and API Name “Needs_Follow_up__c” in the Opportunity object is checked by a process when the Opportunity needs to be reviewed because 30 days have passed since it was last modified.

In this example, the field is technically the same because of the API Name, but the way it’s used is completely different, which means they are not the same conceptually. Pushing any process that uses this field from the source org to the destination org will either:

- overwrite the process in the destination org leaving only the process that comes from the source org

- OR end up with two inconsistent processes in the destination org (the one that already existed in the destination org plus the process from the source org)

And either way, when executing those processes it will likely leave data inconsistent or just break any functionality that uses this field in the destination org.

This also applies to processes: two process builders (or approval processes, or flows, or any other automation type of metadata item), one in each org, can have the same API Name but do completely different things. So the analysis gets more complex when you need to drill down into the actual steps of each process and all its subsequent processes, subprocesses and actions.

The way something works is particularly important when analyzing components such as scheduled jobs, batch jobs, Visualforce pages and Lightning Components, which require reading the code to see what they do and whether that functionality is already available in the destination org.

Case Study: Field Mapping Compatibility

A special case is when you have the same field with the same API Name and the same or a similar concept in both orgs. You may still have issues if the field type is different in each org. This may prevent you from migrating functionality or data that uses this field.

There are many types of fields you can define in Salesforce:

- Checkbox

- Date

- DateTime

- Number

- Text

- Picklist

- Lookup/Master-Detail

- Currency

- Percent

- Phone

- Time

- Geolocation

- Any other type

But not all of them can be converted from one into another type. Here are some of the possibilities you can encounter:

Field Type Mismatch

If you have a field of a certain type in the source org that should replace or be copied to a field of another type in the destination org, depending on the combination it could work or not:

| From | To | Result |
| --- | --- | --- |
| Text | Text | Works as long as the origin text is no longer than the maximum allowed by the target field. |
| Text | Email | Works only if the origin text is formatted as an email, otherwise you get an error. |
| Text | Number | Works only if the origin text is a number, otherwise you get an error. |
| Text | Picklist | Works only if the value of the origin text is one of the possible values of the picklist field, otherwise you get an error. |
| Text | Lookup | Works only if the value of the origin text is an Id of the type of object that the lookup field looks up to. |
| Email | Text | Works all the time. |
| Email | Email | Works all the time. |
| Email | Number | Never gonna work. |
| Email | Picklist | Works only if the value of the origin email is one of the possible values of the picklist field, otherwise you get an error. |
| Email | Lookup | Never gonna work. |
| Number | Text | Works only if the length of the number is no more than the length of the text field. |
| Number | Email | Never gonna work. |
| Number | Number | Works all the time, but may truncate if the target field allows fewer decimals. |
| Number | Picklist | Works only if the value of the origin number is one of the possible values of the picklist field, otherwise you get an error. |
| Number | Lookup | Never gonna work. |
| Picklist | Text | Works only if the length of the picklist value is no more than the length of the text field. |
| Picklist | Email | Works only if the origin picklist value is formatted as an email, otherwise you get an error. |
| Picklist | Number | Works only if the origin picklist value is a number, otherwise you get an error. |
| Picklist | Picklist | Works only if the value of the origin picklist is one of the possible values of the target picklist field, otherwise you get an error. |
| Picklist | Lookup | Works only if the value of the origin picklist is an Id of the type of object that the lookup field looks up to. |
| Lookup/Master-Detail | Text | Works only if the length of the text field is at least 15 characters (which is the minimum length of an Id). |
| Lookup/Master-Detail | Email | Never gonna work. |
| Lookup/Master-Detail | Number | Never gonna work. |
| Lookup/Master-Detail | Picklist | Works only if the value of the origin lookup/master-detail is one of the possible values of the picklist field, otherwise you get an error. |
| Lookup/Master-Detail | Lookup | May work if both fields look up to the same type of record. |

Currency, Percent, and Phone act similarly to Number. URL and Text Area act similarly to Text. Checkbox can only be converted from and to Text or Picklist types if the values are either “TRUE” or “FALSE”. Autonumber can be converted to Text or Number if the format of the Autonumber matches, but nothing can be converted to Autonumber (it’s non-writable by default). The same goes for Formula fields: they can be converted to other types (if the values match), but no field type can be converted into a Formula. DateTime can be parsed and transformed into separate fields for the Date and the Time and vice versa. Time and Geolocation are even more special cases, and they will likely never work as any other type (maybe if they are transformed into their text version or a picklist, but you may lose information and/or functionality).
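When you have many field mappings to review, it can help to encode the table as a lookup and let a script flag the pairs that need attention. The sketch below is one way to do that in Python. The three outcome labels (“always”, “conditional”, “never”) and the short type names are this example’s own simplification of the table, and any pair not listed comes back as “unknown” rather than being guessed.

```python
ALWAYS, CONDITIONAL, NEVER = "always", "conditional", "never"

# Column order for each row below (the "To" type).
TYPES = ["Text", "Email", "Number", "Picklist", "Lookup"]

# One row per "From" type, summarizing the Result column of the table.
ROWS = {
    "Text":     [CONDITIONAL, CONDITIONAL, CONDITIONAL, CONDITIONAL, CONDITIONAL],
    "Email":    [ALWAYS, ALWAYS, NEVER, CONDITIONAL, NEVER],
    "Number":   [CONDITIONAL, NEVER, ALWAYS, CONDITIONAL, NEVER],
    "Picklist": [CONDITIONAL] * 5,
    "Lookup":   [CONDITIONAL, NEVER, NEVER, CONDITIONAL, CONDITIONAL],
}

COMPATIBILITY = {
    (from_type, to_type): outcome
    for from_type, row in ROWS.items()
    for to_type, outcome in zip(TYPES, row)
}


def conversion_outlook(from_type, to_type):
    """Look up how a source type maps onto a destination type."""
    return COMPATIBILITY.get((from_type, to_type), "unknown")


print(conversion_outlook("Number", "Email"))
print(conversion_outlook("Picklist", "Lookup"))
```

A “conditional” result means the mapping depends on the actual values, so those are the fields whose data you should sample before committing to the mapping.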

Picklist Value Mismatch

If you map a picklist field in the source org with a picklist field in the destination org, that is not enough. Both fields need to have the same picklist values, otherwise when you try to save data in them you may get a “bad value for restricted picklist field” error.

| Values in Type of Work in source Model object |
| --- |
| General Info |
| Outreach/Public Ed |
| Volunteer Support |
| Other |

| Values for Work Type in destination Model object |
| --- |
| No |
| Yes |

So it’s not enough that the types of field match, in the case of picklists, they also need to have the same values or convert to a unified list of values as the final version. This also has consequences for any process that uses the picklist values, particularly Sales Processes that use the Stage field values of the Opportunity object.
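A quick way to spot this kind of mismatch before loading data is to list the source values that the destination picklist does not accept. Here is a small Python sketch using the values from the example above; the function name is just illustrative.

```python
def picklist_gaps(source_values, destination_values):
    """Return the source values missing from the destination picklist."""
    destination_set = set(destination_values)
    return [value for value in source_values if value not in destination_set]


source_values = ["General Info", "Outreach/Public Ed", "Volunteer Support", "Other"]
destination_values = ["No", "Yes"]

missing = picklist_gaps(source_values, destination_values)
print(missing)
```

In this example every source value is missing from the destination, which tells you the two fields need a unified list of values (or a value-by-value mapping) before any data can move.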

Text to Email Mismatch

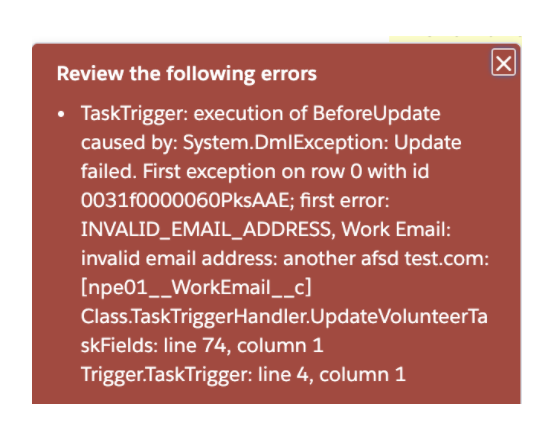

You could copy a text field from the source org to an Email field in the destination org, but only when the value of the text field is email formatted. Otherwise you will get the “invalid email address” error when migrating functionality:
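To find the offending records ahead of time, you can scan the exported text values for anything that does not look like an email. The Python sketch below uses a deliberately simple pattern that is only an approximation, not the validation Salesforce itself applies, and the record Ids and the field name `Contact_Email__c` are hypothetical.

```python
import re

# Simplified check: something@something.something, with no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def invalid_emails(records, field):
    """Return records whose non-empty value in `field` is not email-like."""
    return [
        record for record in records
        if record.get(field) and not EMAIL_PATTERN.match(record[field])
    ]


# Hypothetical rows exported from the source org.
records = [
    {"Id": "1", "Contact_Email__c": "ana@example.org"},
    {"Id": "2", "Contact_Email__c": "call front desk"},
    {"Id": "3", "Contact_Email__c": ""},
]

bad = invalid_emails(records, "Contact_Email__c")
print([record["Id"] for record in bad])
```

Empty values are skipped on purpose, since a blank text field can be loaded into an Email field without a format error.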

Step 3: To Migrate or Not to Migrate?

So now, if somehow you did not get lost down the rabbit hole of comparing your environments, it’s time to decide what to keep and what to toss. In your spreadsheet you can annotate what to do with each metadata item based on your analysis. Some items will be easy to identify and decide on, and others will require further discussion. A lot of this will have to include business stakeholders, who will have to decide what they want the destination environment to look like in the end (“Does it spark joy?”).

Step 4: Pick, Merge or Recreate

Once you’ve decided what to keep, you may notice that some of the items to keep have the same API Name but are not the same concept. Or they are the same concept but have different API Names. Or that some of the processes you want to keep may contradict each other with conflicting actions. So this is the time to decide what to do with what you want to keep:

- Pick one of the metadata items (either the one in the source org or the one in the destination org) as the final version.

- Merge existing metadata items (from the source org and the destination org) to create the final version.

- Create a brand new version of the process as the final version.

This is also a step that will require business owners to decide how the final version should work.

Step 5: Migration Plan

The migration itself is not something that will be done in one step either. Just as you likely will have to also migrate data, you will have to alternate the deployment of metadata with the deployment of data as a test to make sure the data can eventually be migrated completely. You may want to try this plan in the sandbox of the destination org first. Some of the steps may be done in parallel and some will have to follow the completion of a previous step if there’s any dependency. During the migration you should make sure that no new metadata is added to the source org so that you don’t have to reanalyze everything again. Here’s an overview of a plan and why this is a good way to go:

- Migrate the data model (objects, fields, and record types).

- Install AppExchange packages without configuring them. See the “Migrate data” item below as to why not to configure them yet.

- Migrate users (activated, but without sending out the set-password email).

  - All usernames will have to be new in the new instance so they do not conflict with the usernames of the old instance (Salesforce does not allow repeated usernames within its login.salesforce.com domain).

  - Another approach is to wait until the old instance is decommissioned and then recreate the users with their old usernames in the new instance. The issue is that if you want to keep migrated data with lookups to specific users, then the users need to be migrated before the data is migrated.

- Migrate My Domain. Just like with usernames, domains cannot be duplicated, so you may have to either:

  - Create a new one for the destination org

  - OR rename the old one for your source org (for example: from “mycompany” to “mycompanydeprecated”) and then reuse the name for your destination org as it becomes available. See more information in Rename Your My Domain.

  Either way, make sure that all references are switched to the new domain when migrating. See more information in Update Your Salesforce Org for Your New Domain.

- Migrate data:

  - To migrate data we only need the data model (objects, fields, and record types). There is no need to have validation rules, workflow rules, triggers, etc. executing during the data migration, since these are not necessary for legacy data. Even within one org, data that was valid a year ago may no longer be valid now due to validation rules or processes enabled recently, but it is still valid historical data for the time it was created. So you want all data in your new environment as if it had been there under the conditions in which it was originally input. If the data made it in at the time, it complied with the validations of that time and should not be altered just to comply with new validations so that it can be stored.

- Migrate the extended data model (validation rules, buttons, page layouts, list views, tabs, reports, dashboards, custom settings, custom metadata types (CMDT), DLRS, static resources, profiles, roles, and everything else that is not automation).

- Configure the AppExchange packages installed earlier in the plan.

- Migrate small functionality (declarative automation: workflow rules, process builders, flows, approval processes, etc.).

- Activate all validation rules, workflow rules, process builders, etc.

- Migrate big functionality (Apex classes, Visualforce pages, Apex triggers, Lightning components).

  - You might need to update your test methods to make the code deployable if your test classes are not currently passing.

- Regression testing:

  - Make sure all features are working as expected with data entered from now on.

  - This is to guarantee all desired metadata has been migrated and all conflicting processes are resolved.

  - This should include the team of stakeholders that decided which processes would make it into the new org.

- Freeze the old org:

  - Disable users in the old org. The old org will no longer be accessible to end users other than System Administrators so that the new org can go live.

  - Migrate to the new org any data that was input (created or modified) in the old org between the data migration and now. You may want to have everything ready to be deployed beforehand so that the window of time between the data migration and this step is as narrow as possible, to avoid having to sync a lot of data changed during this time.

- Enable users in the new org:

  - Now all users will be able to set their password and log in to the new environment with all data and the features selected as final.

  - Connect any 3rd party integrations to your new org. Update the credentials, certificates or anything used by an external system to connect to Salesforce to point to your new production environment.

- Retire the old org:

  - Now the old org can be let go.

  - All metadata and data should be backed up before this org is completely disabled by Salesforce.
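Since some steps can run in parallel while others must wait for a previous one, the plan can be treated as a dependency graph. Below is a Python sketch using the standard library’s `graphlib` (Python 3.9+) to group the steps into “waves” that could run together. The step names are shorthand for the items above, and the dependencies are this example’s reading of the plan, so adjust them to your own project.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it starts.
# These dependencies are assumptions inferred from the plan above.
PLAN = {
    "data model": set(),
    "install packages": set(),
    "users": set(),
    "data": {"data model", "users"},
    "extended data model": {"data"},
    "configure packages": {"install packages", "data"},
    "declarative automation": {"extended data model"},
    "activate rules": {"declarative automation"},
    "code": {"activate rules"},
    "regression testing": {"code", "configure packages"},
    "freeze old org": {"regression testing"},
    "enable users": {"freeze old org"},
    "retire old org": {"enable users"},
}


def execution_waves(plan):
    """Group steps into waves; steps in one wave have no pending dependencies."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(PLAN)
for number, wave in enumerate(waves, start=1):
    print(number, wave)
```

Writing the dependencies down this way also makes it obvious when someone proposes a shortcut that would, for example, load data before the users it looks up to exist.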

The Takeaway

As you can see, migrating or merging environments in Salesforce is a time-consuming process that requires a lot of detailed analysis from the top down. You can’t assume that just pushing something from one org to the next is going to work or that it’s not going to break something else. And the longer your Salesforce instance has been around, the higher the complexity of your environment will be, and the more you will have to drill down to do your analysis.

It requires a lot of experience in Salesforce and a lot of knowledge of the business owners in terms of what their processes are to be able to understand why something is done the way it is. So counting on a multidisciplinary team and plenty of time (and patience) is key to get it done right.

It’s also a great chance to document what you have and how it works. Often not everything is properly documented (technically, how it is done inside, and/or from the user’s side, how it works), so all the time you spend doing your analysis can serve the additional purpose of leaving guides for current and future administrators, developers and users.

Resources

Here are some resources to learn more:

- Salesforce.com Org Compare Tool

- Change Sets

- Package and Distribute Your Apps

- Build Apps Together with Package Development | Salesforce Trailhead

- Metadata Deployment with Workbench

- Develop and Deploy Using Salesforce Extensions for Visual Studio Code

- Deploy Using the Ant Migration Tool

What do you think of this summary? Is there anything else that should be brought to attention when migrating/merging orgs? Tell me all about it in the comments below, in the Salesforce Trailblazer Community, or tweet directly at me @mdigenioarkus.